Cindy’s in a major Omega campaign, Naomi’s back on the catwalk and Donatella broke the internet with her reunion of the ‘famous five’ for the Versace SS18 show. As the original supers stalk back into the spotlight, here’s our edit of the best models of all time.

Introduction by Autumn Whitefield-Madrano

If you were old enough to reach a newsagent counter in 1990, you’ll remember that cover: Naomi, Linda, Tatjana, Christy and Cindy, high-glam action figures clustered together, gazing at us on the front of January Vogue. Even those of us who fancied ourselves too lofty for supermodel daydreams were entranced by Peter Lindbergh’s black and white photo, elevated from the realm of fashion and approaching something closer to cinema. It was the image that kicked off the era of supermodel mania. If you weren’t old enough the first time round, you’ll definitely remember a certain Instagram-breaking moment last September – a similarly Amazonian line-up of Cindy, Claudia, Naomi, Carla and Helena, reunited on the Versace SS18 catwalk. The ‘supermodel’ has come full circle – quite literally in the case of these returning stars, whose stock has never been higher than now.

The original gang, a bit like the Spice Girls, had someone for everyone. Naomi Campbell: assertive, playful yet exquisite, the obvious icon not only for young black women, but for those who coveted her haughty ‘I’m worth it’ chutzpah. Linda Evangelista: chameleon-like with her ever-changing hair colour, yet always unmistakable, and admired for the gumption of saying she wouldn’t get out of bed for less than $10,000 a day. Christy Turlington, with her calm doe-eyed gaze and impossibly perfect features that looked carved from soapstone. Cindy Crawford and Tatjana Patitz, the smiling, can-do faces of athletic vigour on each side of the pond. That cover was shot more than a quarter of a century ago, yet ask most people to name a supermodel, and chances are at least one member of this quintet will roll instantly off the tongue.

Not that they were the first to be termed ‘supermodels’. The word has been bandied about since 1891, when painter Henry Stacy Marks rhapsodised about a certain variety of sitter: ‘The “super” model… goes in for theatrical effect; always has an expression of “Ha! Ha! More blood…”’ With each person dubbed a ‘supermodel’ over the 75 years it took for the term to take hold, the profession took form. To be a supermodel is to be an entity larger than that of fashion plate: Lisa Fonssagrives, a Swede widely considered to be the very first of the type, is perhaps known as such because she leveraged her modelling streak of the 40s into a fashion-design career. You also need to be instantly recognisable, to have a Cindy Crawford-mole USP, if you will: when Dorian Leigh – another candidate for the world’s first supermodel – met legendary editor Diana Vreeland, Mrs V told her, ‘Do not – do not do anything to those eyebrows!’ Perhaps it’s about celebrity (Twiggy prompted The New York Times’ first use of the word, in 1968), or an unshakeable self-belief (Janice Dickinson claimed she coined the term in 1979, which is patently untrue).

If the 1990 quintet were the first graduating class of modern supermodels, Kate Moss was its first rogue scholar. She seized the public eye in a singular fashion: she was unhealthily skinny, the story went, and then there was that whole ‘heroin chic’ thing. Plus, she didn’t care, or at least she didn’t look like she did. That deadpan expression, that flatness echoed by the broad planes of her face – teenage girls who couldn’t disguise that they cared desperately about boys, good grades and being liked were probably infuriated by her insouciance, consoling themselves by silently thinking, ‘She isn’t even that pretty.’

It’s this, I now see, that made her a supermodel. Kate Moss is no gargoyle; she doesn’t even qualify as jolie laide. But her face was the next logical step from the template that her supermodel elders had etched. Evangelista et al were jaw-droppingly beautiful, none of them dabbled in plebeian prettiness. Kate, with her much-mentioned (in model terms) shortness, ‘bandy’ legs and gappy smile, had the same magnetism and uniqueness they all share. Mere beauty isn’t what set them apart. To earn the ‘super’ prefix, a model doesn’t have to be the most beautiful model nor does she have to be the most commercially successful. She has to have that ineffable quality known as ‘It’, even as her It-ness is conferred upon a supermodel largely after her christening. She has to be comfortable with that, inhabit it and use it to her advantage. ‘There was nothing like these girls,’ says Sasha Charnin Morrison, who witnessed the rise of the supermodel during her 30-plus years in fashion publishing, including stints as fashion director at Harper’s Bazaar, Allure and Us Weekly. ‘When they said things like, “I don’t get out of bed for less than $10,000 a day,” that made complete sense. They were worth every penny.’

And they were, for a while. The predecessor to the model was the mannequin; models were referred to as ‘live mannequins’ before we settled upon our current term. A model’s job is to wear clothes, to be dressed, styled and passively done unto. The supermodels turned this on its head – the essence of feminism is the ability to have a choice, and these women were the ones doing the choosing. They were in control of their bodies, careers and the clothes they agreed to wear – and they sold them. It is less about possessing beauty and more about possessing a ‘look’– one that can shift depending on circumstance and styling, of course. A model asks that you look at her as a part of the whole – the fashion, the make-up, the hair, the mise-en-scène. A supermodel asks no such thing; she demands, and in doing so makes it clear that it is her essence that should remain indelible in your mind.

Which was, of course, their downfall. By 1998, Time had declared the death of the supermodel, with good reason. Moss walked for Alexander McQueen in spring 1997; a year and a half later, his showstopping Joan of Arc show featured relative nobodies. Designers wanted their creations to take centre stage, and magazine covers used actresses when they wanted recognition, and lesser-known models when they wanted the face of ‘relatability’ – the idea being that readers better project their own identity on to a blank canvas, unaccompanied by the overwhelming individuality of a Cindy or a Naomi.

Today’s supermodels may not have inherited their predecessors’ iconic status as models, but their DNA carries other imprints: business acumen (Cindy founded an empire on fitness videos before YouTube was a twinkle in Steve Chen’s eye), brand relationships, distinct yet pliant identities. While the originals were made into stars by endless press coverage, social media has allowed Gigi, Bella and Kendall to create stars of themselves. They aren’t at the mercy of the press for their image; they create their own personae, controlling what to reveal and what to conceal. With this ability to generate mystery, the new supermodel has mastered the catch-22 of It-ness, along with the business savvy that goes with the title. What remains to be seen is whether they will develop the quality that sees a quorum of the Vogue five working into their 50s – the ability to compel. When you look at Naomi Campbell lounging on a bed, gazing into the camera with a languid tease, you see light. Perhaps you envy her, but your deeper envy belongs to the photographer, able to watch her transcendence from behind the safety of the lens. You can’t tear your eyes away.

Scroll down for our best models ever hall of fame…

1. Suzy Parker

(Image credit: REX/Shutterstock)

Era: Late 1940s — early 1960s From: New York Her look: Freckly and flame-haired with an hourglass figure USP: A favourite muse of photographer Richard Avedon, her sister Dorian was a famous model, dubbed the ‘world’s first supermodel’. That was before the world met Suzy. She went on to eclipse her sis, becoming the first model to earn $100,000 per year and the only one to have an (unreleased) Beatles song named after her

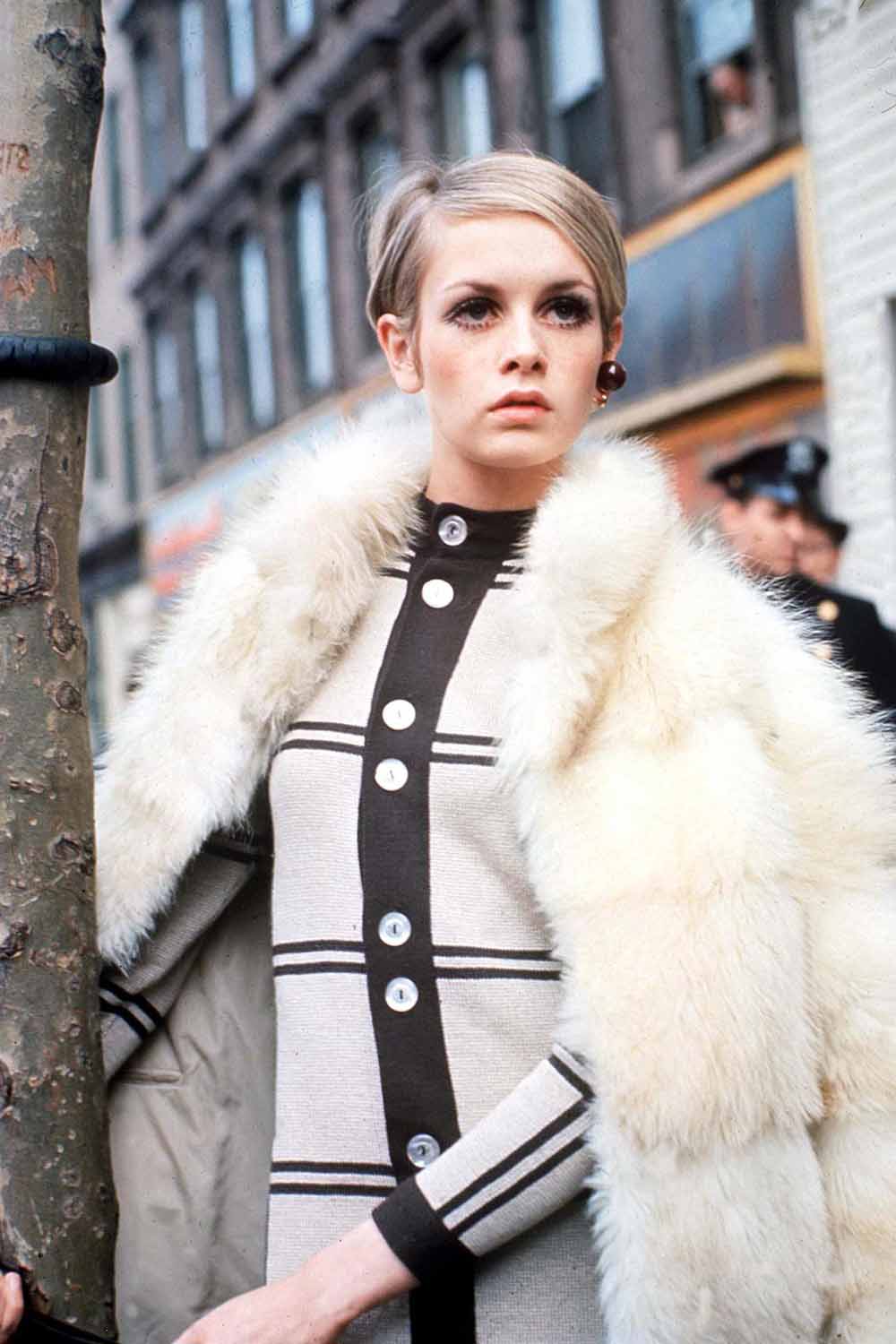

2. Twiggy (AKA Lesley Hornby)

(Image credit: REX/Shutterstock)

Era: 1960s From: Neasden, North-West London Her look: Bambi — after a few weeks of no dinner. Big eyes, spidery lashes and a skinny twig-like frame (hence the nickname). USP: Invented the Swinging Sixties — with a little help from the Beatles, Carnaby Street, et al. Discovered at 16 when she had her hair chopped off in hairdresser Leonard’s West End salon — a fashion journalist saw the pictures, and the rest is history.

3. Veruschka

(Image credit: Alinari/REX/Shutterstock)

Era: 1960s From: Kaliningrad, Russia. Her look: A lionine mane of blonde hair and the kind of versatile face that could take any look — fake ‘eyes’ on her eyelids, being covered in gold leaf… You name it, she pulled it off USP: Her blue blood and fascinating family. Real name: Vera Gottliebe Anna Gräfin von Lehndorff-Steinort. Her mother was a Prussian countess and her father was a German count who was an active member of the Resistance — he was eventually executed for an assassination attempt on Adolf Hitler.

4. Penelope Tree

(Image credit: Daily Mail/REX/Shutterstock)

Era: 1960s From: UK Her look: Saucer eyes, spiky lashes and poker-straight hair. ‘An Egyptian Jimminy Cricket’ according to her then-boyfriend, cheeky chappie photographer David Bailey. USP: Super-connected socialite roots — brought up in the US, her mother was an American socialite descended from famous social activist Endicott Peabody. Her British MP dad threatened to sue if her first-ever photos (by legendary photographer Diane Arbus) were published.

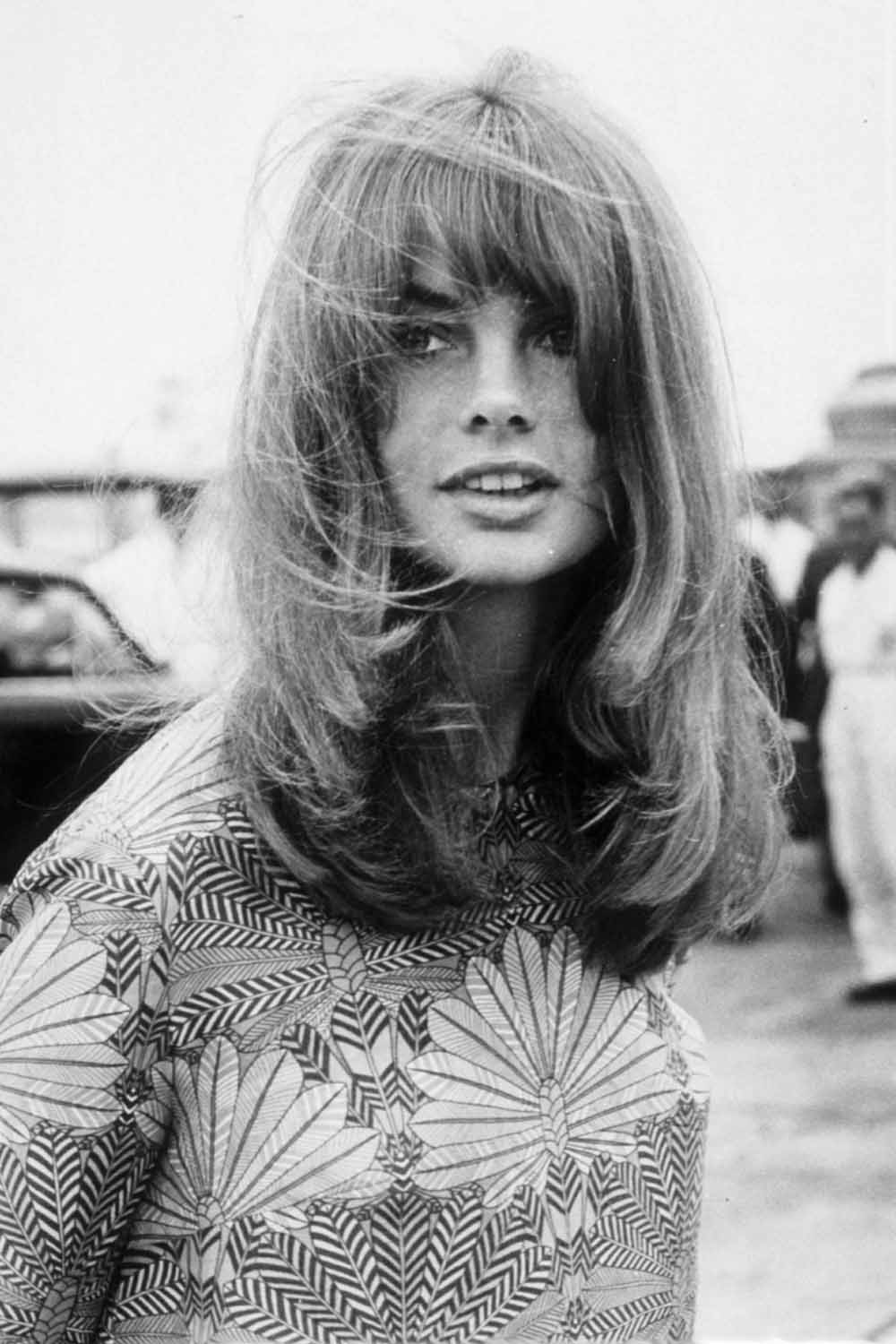

5. Jean Shrimpton (AKA ‘The Shrimp’)

(Image credit: REX/Shutterstock)

Era: 1960s From: High Wycombe, Buckinghamshire Her look: Delicate, snub-nosed perfection USP: Yet another David Bailey conquest, she started her affair with him when he was still married to his first wife, who he divorced to be with Shrimpton. Described as having ‘the world’s most beautiful face’, everything she wore (even barely visible in a beauty campaign) was always a sell-out

6. Pat Cleveland

(Image credit: Evening Standar/REX/Shutterstock)

Era: 1960s-Present From: New York Her look: European-style beauty with a free-flowing mane of black hair USP: One of the most famous black models to break through fashion’s exclusionary policies of the 60s, she was named ‘the all-time superstar model’ by former US Vogue editor, André Leon Talley. Modelling for designers from Valentino to Yves Saint Laurent, it was on the runway where she really established her fame, bringing her own theatrical style. She moved to Paris in 1970, refusing to return to the US until 1974, the year Vogue first featured a black model on their cover.

7. Lauren Hutton

(Image credit: GLOBE/REX/Shutterstock)

Era: 1970s-present. Yup, she’s still going, modelling for The Row, Club Monaco and jewellery brand Alexis Bittar in recent years From: Charleston, South Carolina Her look: Two words: gap teeth. After trying to disguise the gap with mortician’s wax, she eventually refused to have them ‘fixed’, heralding a new era of healthy body image and natural imagery in modelling USP: The first model to score a big-bucks beauty contract, negotiating $400,000 to be the ‘face’ of Revlon in 1973. Appeared on the cover of Vogue a record 41 times. Not forgetting her eight-page nude magazine photoshoot — at the age of 61. 8. Beverly Johnson

Era: 1970s

From: Buffalo, New York

Her look: Chisel-jawed glamour

USP: Credited with forcing fashion to take black women seriously, she was the first African-American model to appear on the cover of US Vogue in 1974 and ditto French Elle in 1975. Also an actress, she’s appeared in everything from Law & Order to Sabrina, the Teenage Witch in recent years

9. Christie Brinkley

(Image credit: REX/Shutterstock)

Era: 1970s and 1980s From: Michigan, USA Her look: Cookie-cutter California Girl personified (she was brought up in LA) — toned and tanned with a perma-grin USP: She had the longest-running beauty contract of any model, ever — 25 years as the face of Cover Girl. Along with 500 magazine covers, including 3 consecutive Sports Illustrated swimwear issue covers — quite the coup in the 1980s. Also: a spell as Mrs Billy Joel.

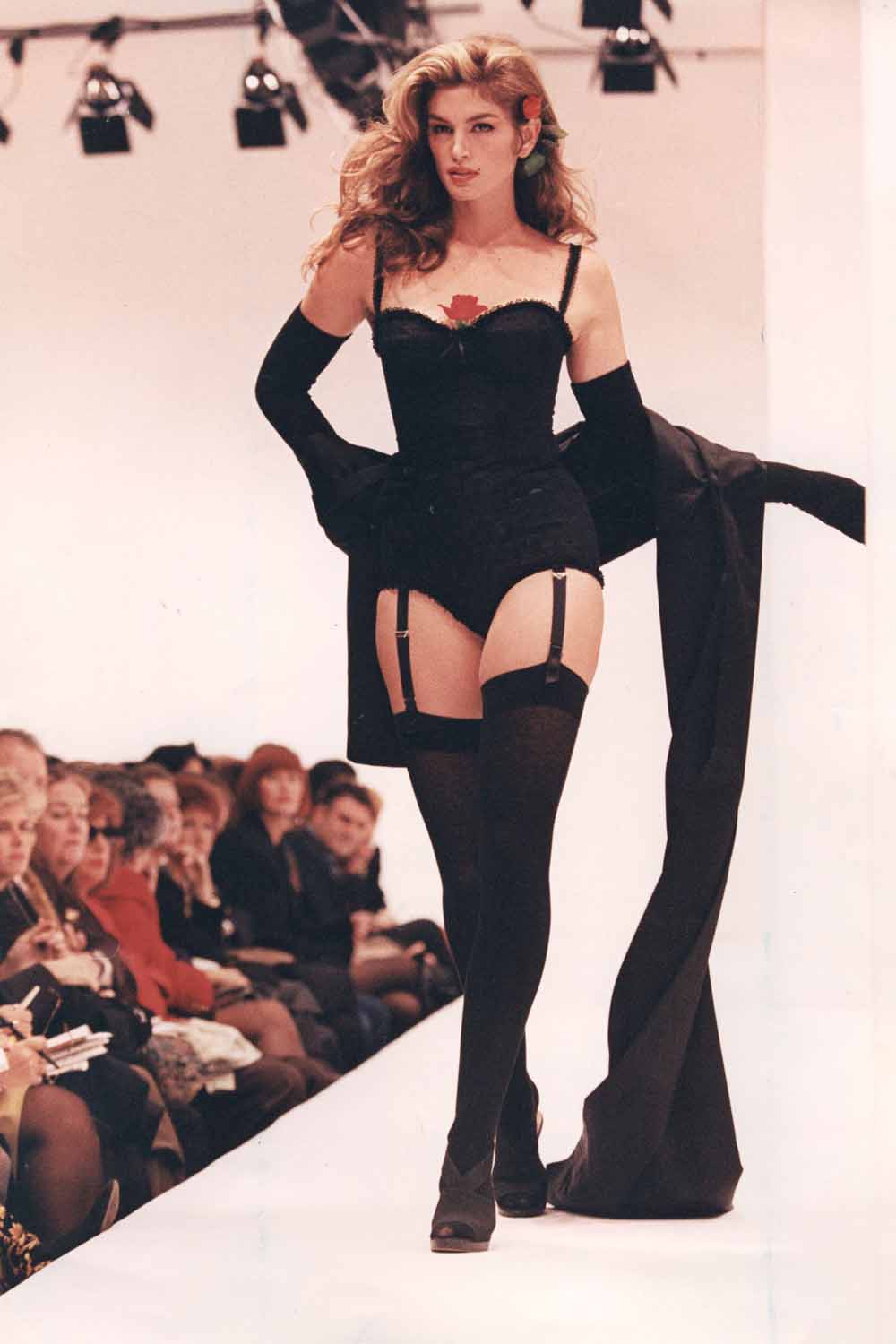

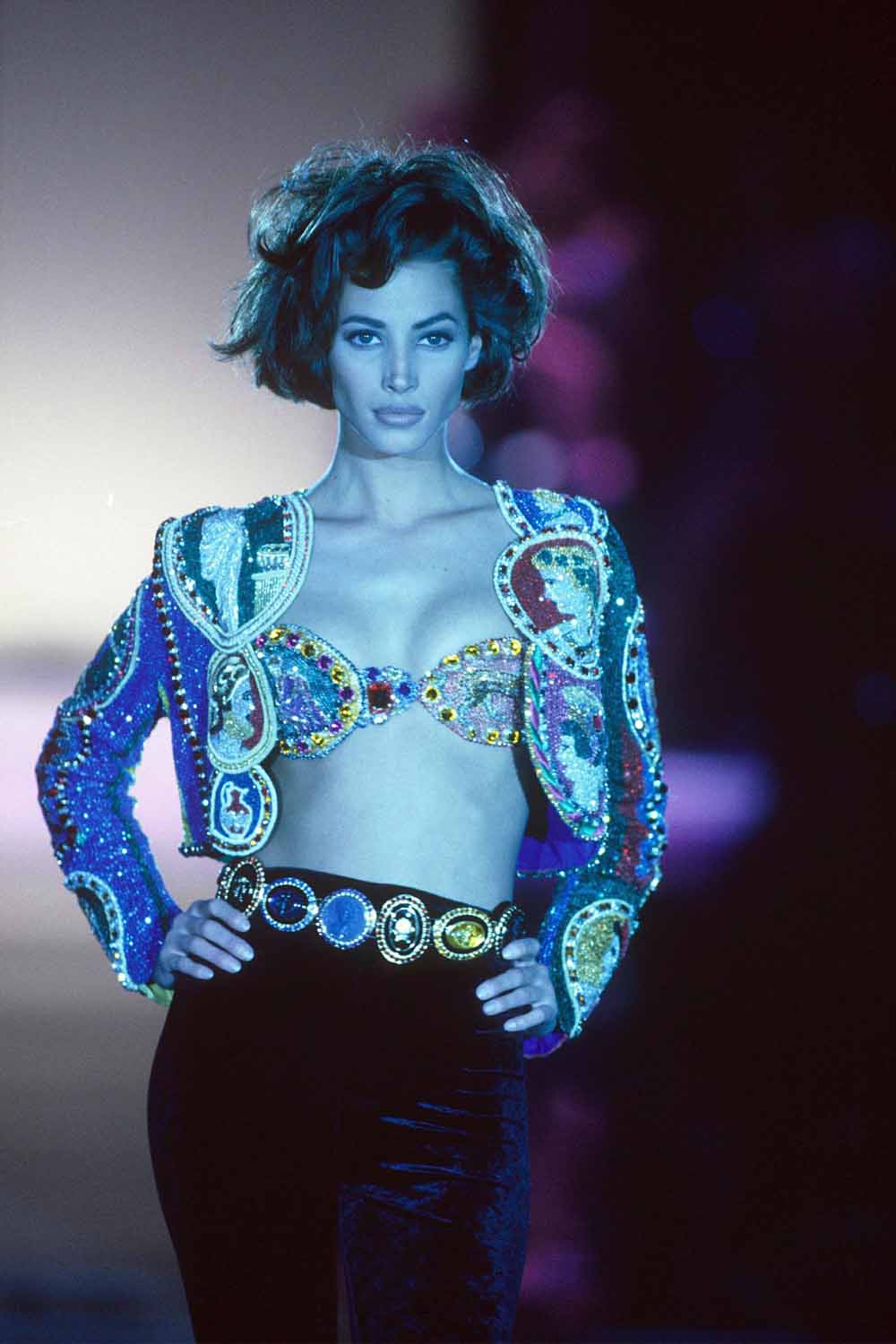

10. Cindy Crawford

(Image credit: Daily Mail/REX/Shutterstock)

Era: 1980s and 1990s From: Illinois, USA Her look: Big hair, strong brows, athletic body. Hang on, have we forgotten something? Oh yes. The most famous mole OF ALL TIME. USP: Brains and business acumen. She nearly ended up as a chemical engineer and invented the celebrity workout video with her famous ‘Cindy Crawford: Shape Your Body’ exercise series, raking in a fortune in the process.

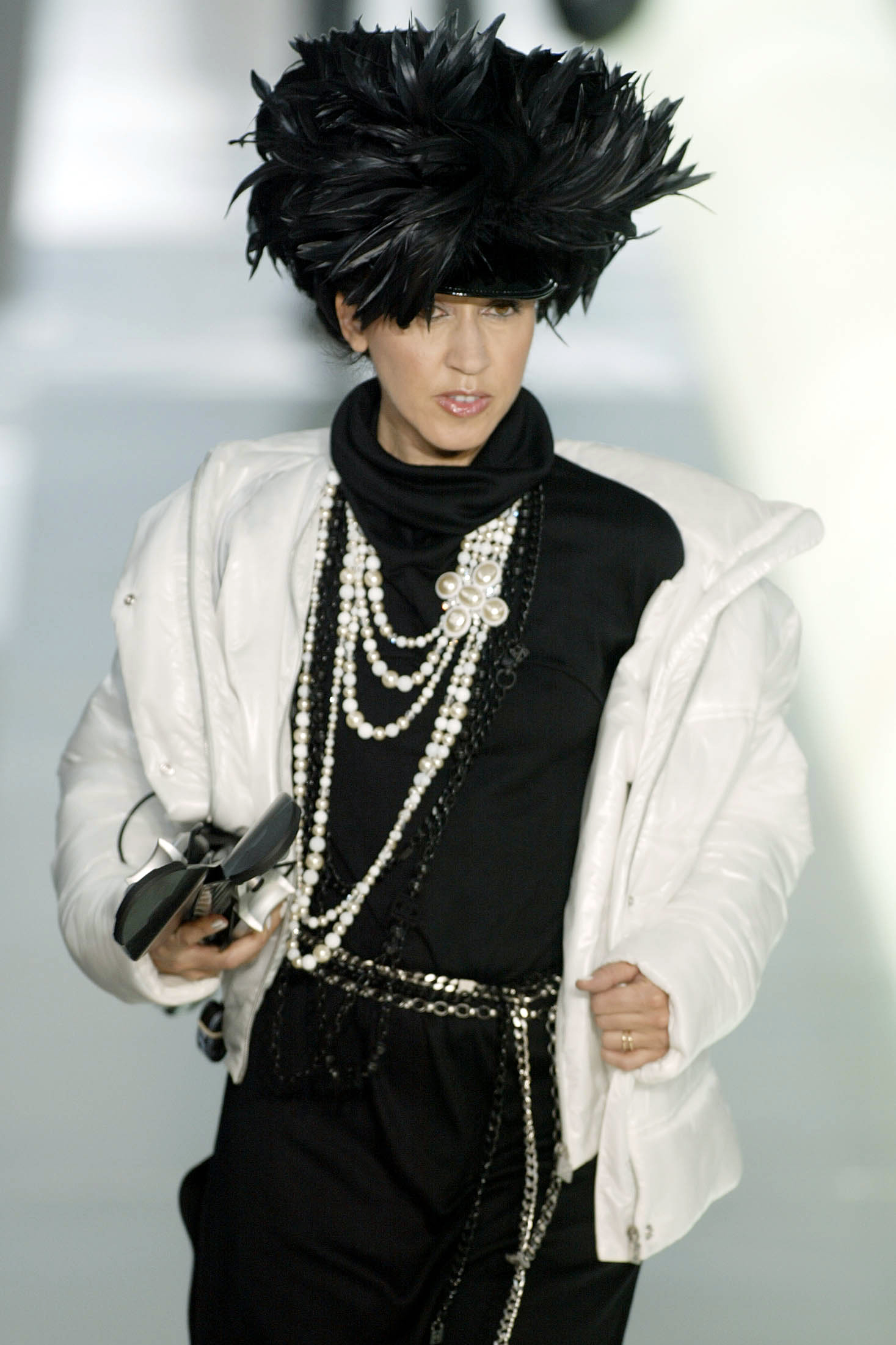

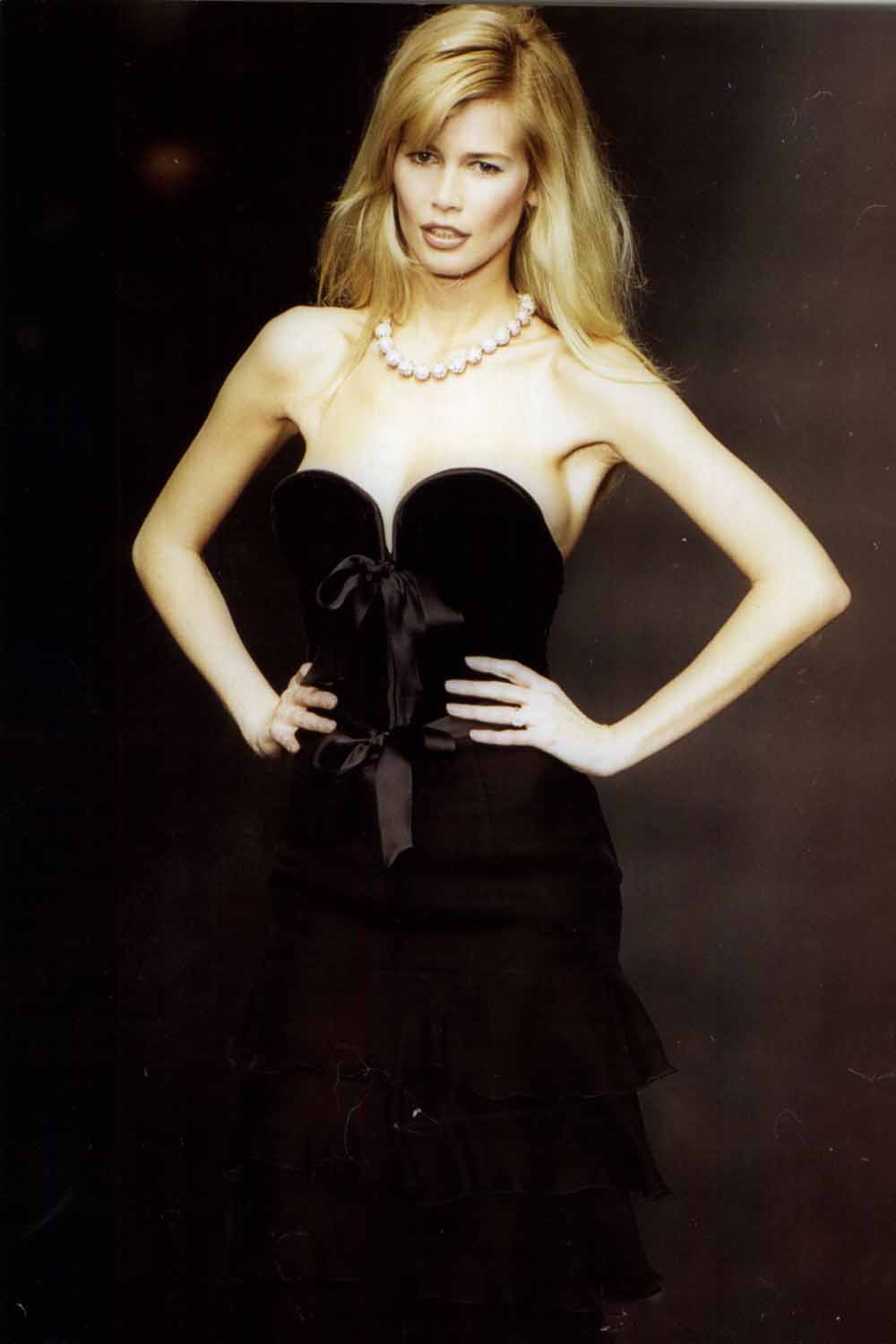

11. Claudia Schiffer

(Image credit: Ken Towner/ANL/REX/Shutterstock)

Era: 1980s and 1990s From: Rheinberg, Germany Her look: Teutonic Brigitte Bardot USP: Holds the world record for most amount of magazine covers, as listed in the Guinness Book of World Records. Was hand-selected by Karl Lagerfeld for his Chanel campaigns and remained one of his favourites for years. But what can top being ‘guillotined’ by permatanned magician David Copperfield (her fiance from 1994-1999) live on stage?

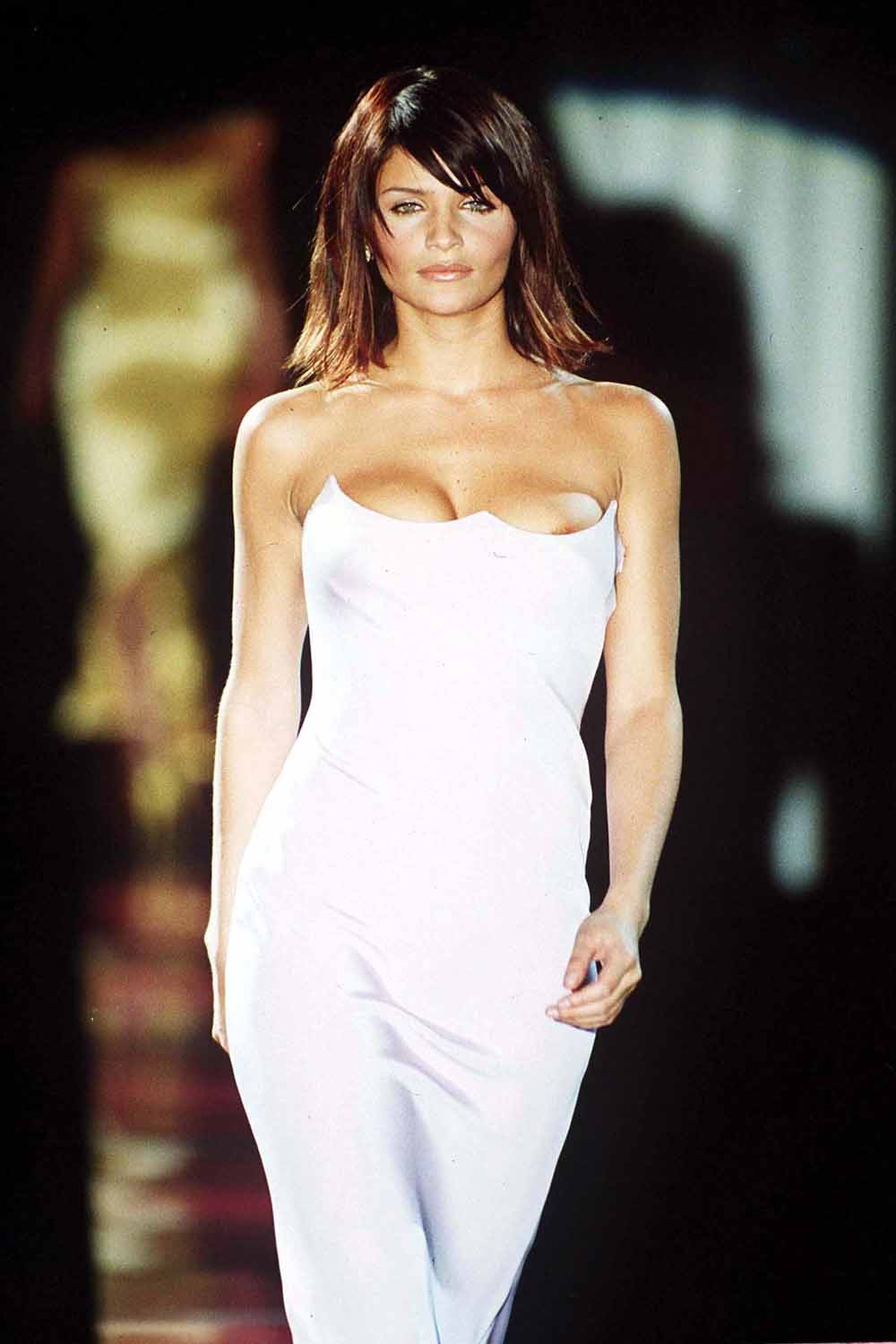

12. Helena Christensen

(Image credit: Steve Wood/REX/Shutterstock)

Era: 1980s-present From: Copenhagen, Denmark Her look: Enigmatic and olive-skinned, thanks to her mixed Danish/Peruvian heritage USP: Former Miss Universe Denmark with serious creative skills. She wanted to be a musician and has run her own fashion boutiques (including Butik in New York) and clothing lines. A successful photographer, she was the launch creative director of Nylon magazine, and has held exhibitions of her work

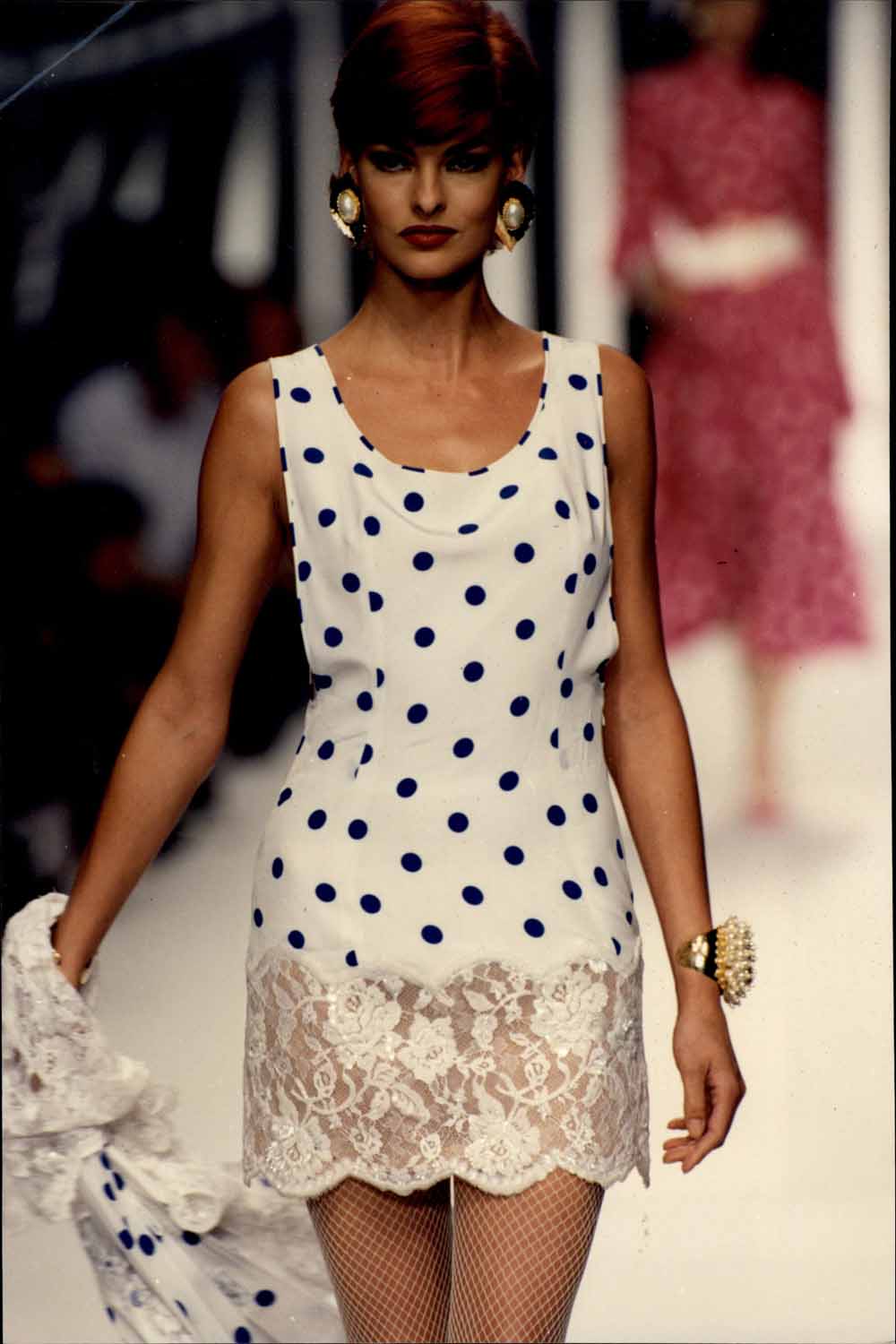

13. Linda Evangelista

(Image credit: Ken Towner/ANL/REX/Shutterstock)

Era: 1980s-present From: Ontario, Canada Her look: Hawk-nosed chameleon, with ever-changing hair. Long before ‘The Rachel’, it was all about ‘The Linda’ — her 1989 gamine crop that became famous the world over, inspiring wigs and other celebrities (hello, Demi Moore in Ghost). USP: The feisty models’ model. It was she who uttered the infamous phrase, ‘We don’t wake up for less than $10,000 a day,’ referring to her and her fellow supermodel gang. She’s credited with setting a new benchmark for models’ fees and caused controversy by reportedly earning sky-high sums for walking in catwalk shows for the big houses.

14. Kristen McMenamy

(Image credit: David Fisher/REX/Shutterstock)

Era: 1980s-presentFrom: Pennsylvania, USAHer look: Other-worldly androgynous. Legendary model agent Eileen Ford advised her to have cosmetic surgery — luckily, she pressed on with her unique lookUSP: Ushered in the era of grunge in 1992. Some might say she actually started it, by chopping her long red hair off and letting Francois Nars shave her eyebrows for an Anna Sui catwalk show. Cue international recognition and a starring role in fashion shoots with titles like ‘Grunge & Glory.’ Combined with her theatrical poses and runway ‘walk’, she’s still your go-to girl for high-fashion drama. 15. Christy Turlington

(Image credit: Paul Massey/REX/Shutterstock)

Era: 1980s-present From: California (her mother is from El Salvador), USA Her look: Doe-eyed serenity — the embodiment of Calvin Klein’s fragrance Eternity, one of her most famous campaigns. USP: The Zen One. Having launched her own Ayurvedic skincare line and yoga range, she’s now a prominent campaigner for maternal health in the developing world. She studied for a masters’ degree in public health and launched Every Mother Counts, a non-profit organisation that supports maternal health programmes in countries including Malawi, Uganda, and Indonesia, as well as directing a 2010 documentary on the subject, No Woman, No Cry

16. Elle Macpherson

Era: 1980s-present

From: Sydney, Australia

Her Look: Athletic girl-next-door with surfboard abs

USP: She’s graced the cover of a record five Sports Illustrated Swimsuit issues, earning herself the nickname ‘The Body’. She had a ‘Body’-off with Heidi Klum after Klum referred to herself using the same nickname in an advertisement for a Victoria’s Secret bra. Savvy businesswoman to boot, she’s made millions with her lingerie line Elle Macpherson Intimates and was also head judge of Britain & Ireland’s Next Top Model for three years from 2010.

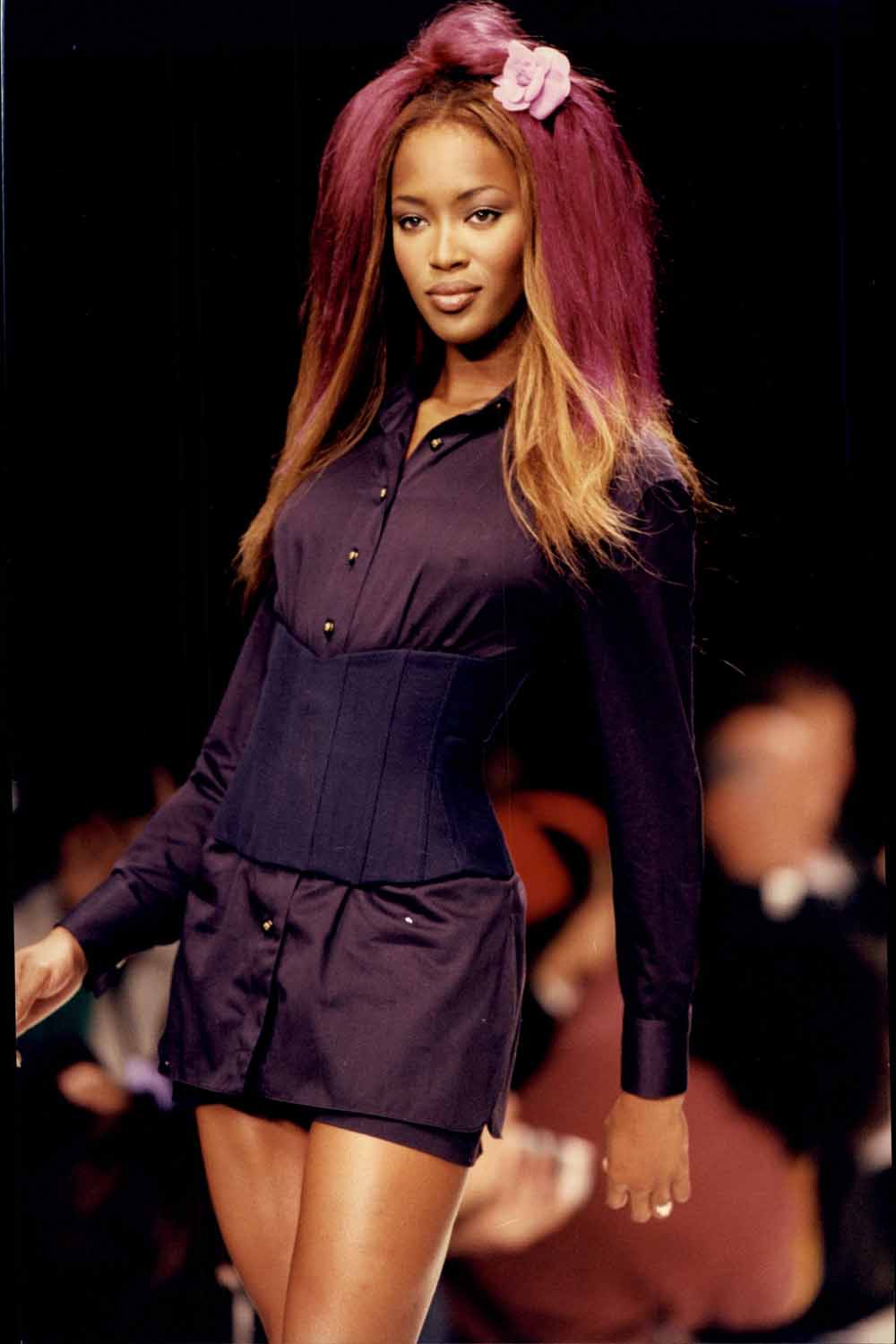

17. Naomi Campbell

(Image credit: Ken Towner/ANL/REX/Shutterstock)

Era: 1980s-present From: Streatham, South London Her look: Perfectly symmetrical with pillowy pout and serious (some might say ferocious) attitude. USP: Where shall we start? The good: The first black model to appear on the cover of US Vogue’s key September issue (in 1989) Forced the fashion industry to up their bookings of black girls (her pal Yves Saint Laurent threatened to pull his advertising if they didn’t) Starred in a Bob Marley music video at age 7 Took fashion’s most famous tumble on a pair of Vivienne Westwood mega-platforms Was called ‘honorary granddaughter’ by Nelson Mandela Has worked non-stop for charity — including raising £4.5 million (and counting) for disaster relief through her own Fashion For Relief foundation The bad: Um. Convincted of assault four times and accused of various forms of assault or abuse by no less than 9 employees and associates Was forced to appear in a 2010 war crimes trial against former Liberian president Charles Taylor after apparently receiving a ‘blood diamond’ from him. She denied any knowledge.18. Kate Moss

(Image credit: CHARLES SYKES/REX/Shutterstock)

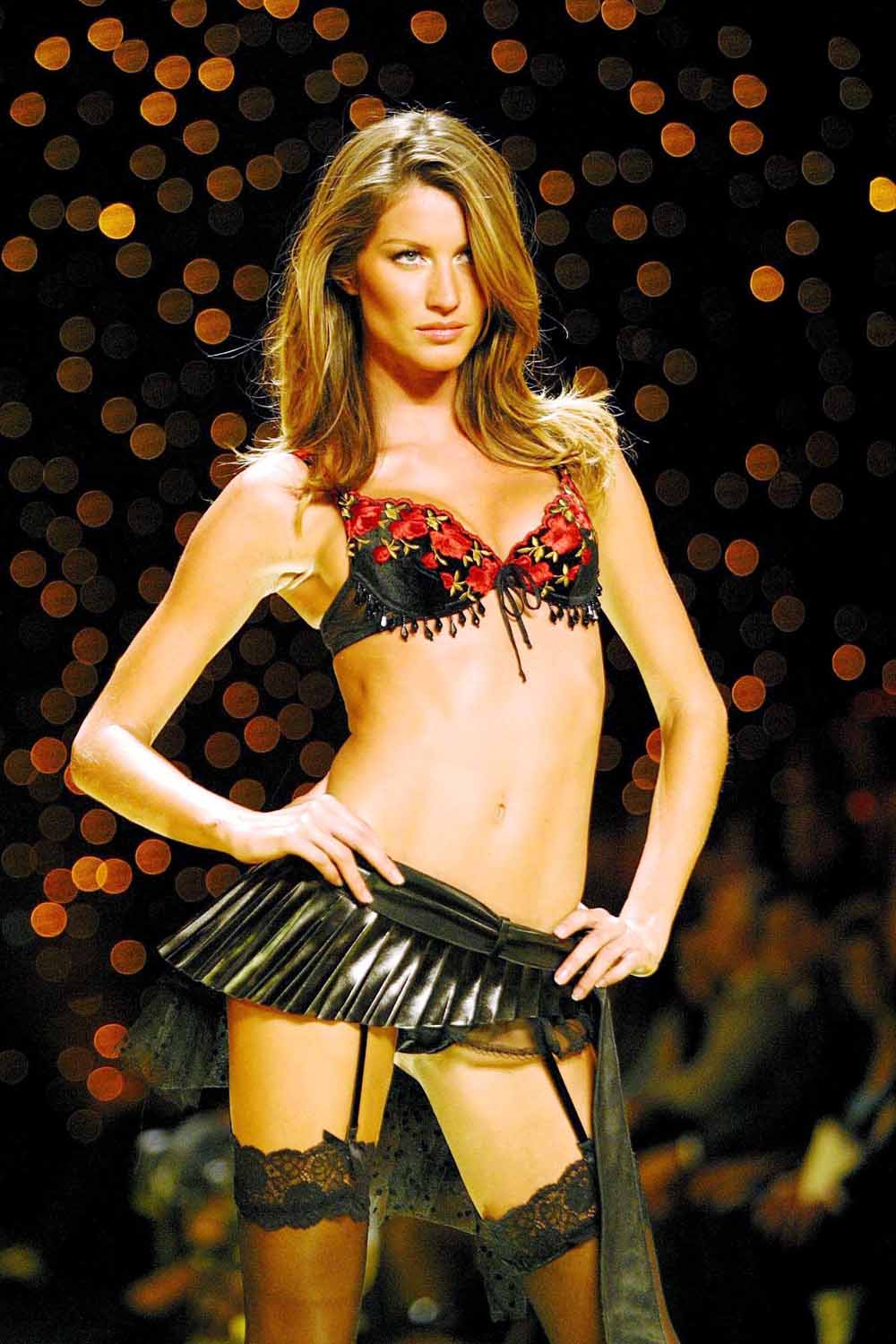

Era: 1980s-present From: Croydon, South London Her look: The Game-Changer: ‘short’ (5’7″), slightly bow-legged, gap-toothed, freckly… The anti-glamazon. USP: The ultimate muse. She rarely speaks, but that’s OK because all other significant cultural voices do it for her. An 18-carat statue of her worth £1.5m created in 2008 for the British Museum was the largest gold statue created since Ancient Egyptian times, a painting of her by Lucian Freud was sold for £3.9 million in 2005, and she’s inspired every fashion photographer from Corinne Day to Mario Testino. Not to mention musicians, including her exes Pete Doherty and Jamie Hince. We won’t start on her actual style. If it wasn’t for her, would ‘Get The Look’ fashion pages even exist?19. Gisele Bundchen

(Image credit: Startraks Photo/REX/Shutterstock)

Era: 1990s-present From: Southern Brazil. Her look: Brazilian bombshell who brought the sexy back. Sunkissed hair, sunkissed limbs, and a special Zoolander-tastic stride known as the ‘horse walk’ — knees up, feet kicking out in front. USP: She’s been the highest-paid model in the world every year since 2004. She wore the most expensive lingerie ever created in the 2000 Victoria’s Secret show (the ‘Red Hot Fantasy Bra’ worth $15 million) and she’s chalked up over 350 ad campaigns and 1,200 magazine covers.

20. Natalia Vodianova

(Image credit: Richard Young/REX/Shutterstock)

Era: 1990s-present From: Gorky, Soviet Union (now Russia) Her look: Dreamy innocent with piercing blue eyes USP: Rags to riches tale following a heartbreaking early start — born into extreme poverty and with a disabled half-sister, she sold fruit on the street as a child to help family finances, before ending up as Viscountess Portman after marrying English property heir Justin Portman. Now goes out with equally illustrious Antoine Arnault, son of LVMH founder Bernard Arnault, and is a mother of four. Yes, four. And she can still shimmy into an Italian sample-size catwalk look 21. Erin O’Connor

(Image credit: Evening Standar/REX/Shutterstock)

Era: 1990s-present From: West Midlands Her look: Unconventional, angular and aristocratic USP: The favourite muse of fashion’s great creatives, including Alexander McQueen (where she played a madwoman trapped in a glass cage for one of his early shows, Lunatic Asylum), John Galliano and Jean Paul Gaultier, who ‘discovered’ her. One of the few living people to appear on a Royal Mail postage stamp, part of a specially-commissioned set shot by photographer Nick Knight.

22. Alek Wek

(Image credit: Wood/REX/Shutterstock)

Era: 1995-Present From: Wau, South Sudan Her look: Strong, unique and exotic USP: Dark-skinned models were almost unheard of in the high fashion industry before Wek came along. After being scouted by Models 1 age 14 she became a huge influence on changing beauty ideals and went on to grace the covers of Elle, i-D, Glamour and Cosmopolitan, as well as appearing in editorials for Vogue. She fled her native Sudan in 1991 to escape the civil war and now devotes time to work with UNICEF, Doctors Without Borders and World Vision.

23. Agyness Deyn

(Image credit: Richard Young/REX/Shutterstock)

Era: 2000s From: Greater Manchester Her look: Punky bleached crop and down-to-earth, non-model ‘walk’. USP: Best mates with teenage pal Henry Holland, it’s fair to say she helped launch his career while he helped launched hers. Her first job was in a chip shop in Rossendale. Now a bona fide serious actress, starring in West End play The Leisure Society and films including Electricity (2013) and Patient Zero (2015)

24. Jessica Stam

(Image credit: Charles Sykes/REX/Shutterstock)

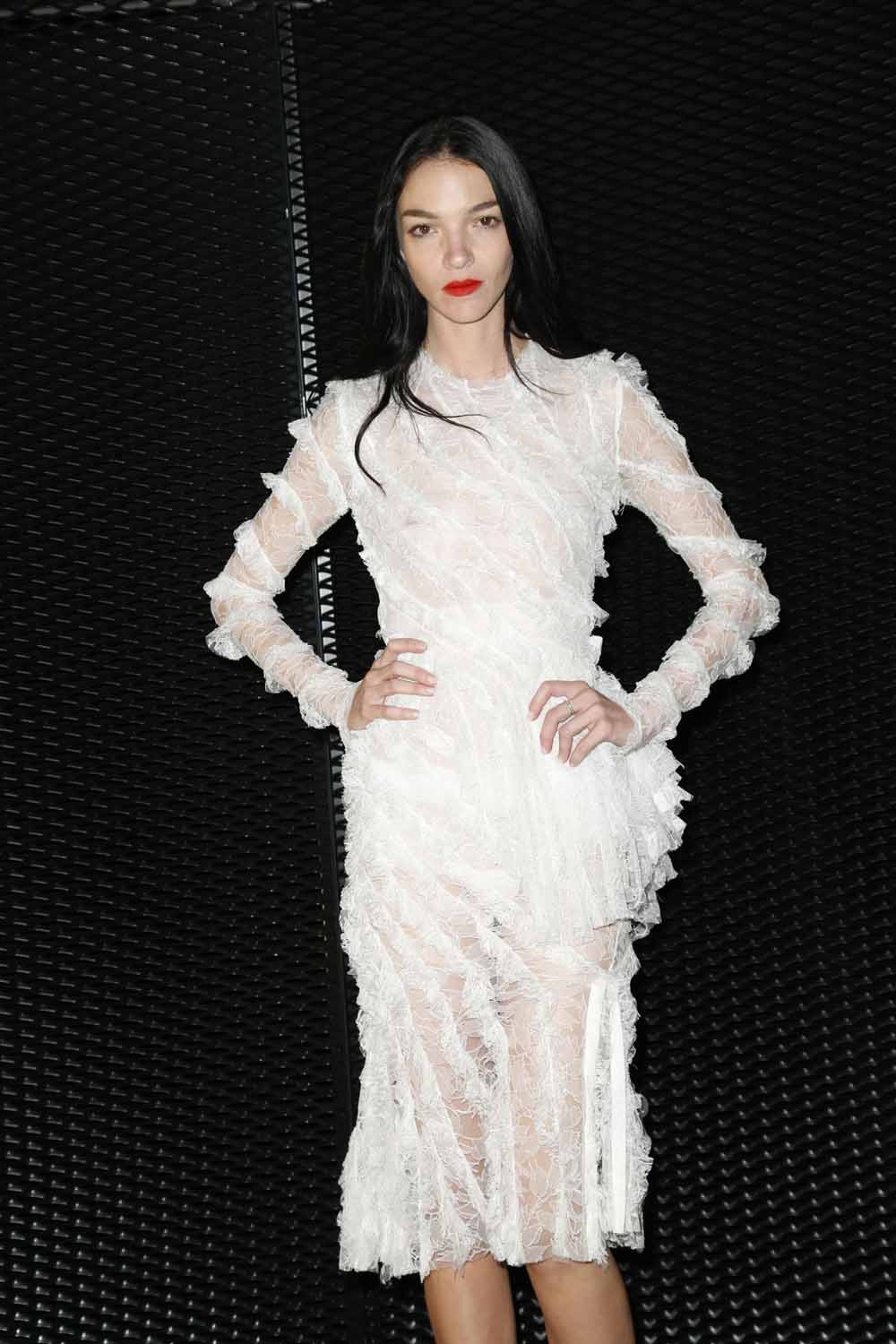

Era: 2000s-present From: Ontario, Canada Her look: Elfin porcelain-doll pretty, with feline eyes USP: She inspired one of the original It bags, the Marc Jacobs ‘Stam’ — a quilted, ladylike frame bag — in 2005. Demand was so high, waiting lists had to be closed. A glammer claim to fame than being the world’s most beautiful dentist — her original career plan. 25. Mariacarla Boscono

(Image credit: WWD/REX/Shutterstock)

Era: 2000s From: Rome (with a well-travelled childhood featuring spells in Florida, Italy and Kenya) Her look: Black-haired, pale-skinned Sicilian-widow glamour USP: Has appeared in the Pirelli calendar 3 times. She’s earned a place in fashion history as long-running muse to Givenchy’s Riccardo Tisci — she’s basically the human embodiment of his dark, Latin-infused aesthetic.

26. Daria Werbowy

(Image credit: David Fisher/REX/Shutterstock)

Era: 2000s From: Krakow, Poland (she grew up in Canada) Her look: Tawny, leggy, panther-like grace USP: A true fashion models’ model, she holds the all-time record for opening and closing the most shows in a single season. Her long, lithe limbs and cool, natural beauty have been seen in everything from Balmain (the image of her in then-designer Christophe Decarnin’s 2010 collection with its ripped khaki vests, sharp-shouldered military jackets and skinny leather jeans sparked a thousand copycats) to Vogue Paris shoots galore.

27. Liu Wen

Era: 2000s From: Hunan, China

Her look: Delicate features topped off with sweet dimples

USP: Dubbed ‘the first Chinese supermodel’ by reductionists everywhere, it can’t be denied that she’s done a lot to bridge the gap between East and West as far as fashion’s concerned. The first East-Asian model to star in the Victoria’s Secret show, the first East-Asian spokesmodel for Estée Lauder and the first Asian model to make Forbes magazine’s annual highest-paid model ranking. Also riveting: she claims she’s never had a boyfriend. 28. Cara Delevingne

(Image credit: Jim Smeal/BEI/BEI/Shutterstock)

Era: 2010s From: West London Her look: Angel face with beetle brows USP: Aristo with attitude. Impressive array of comedy facial expressions (tongue-out selfie, anyone?) and ‘don’t give a ****’ approach. Her family’s posher than a princess (relatives include a lady-in-waiting to Princess Margaret, baronets and viscounts aplenty and two Lord Mayors of London) but she’s not shy about her undercut or indeed about being bisexual, currently dating female singer St Vincent.29. Edie Campbell

(Image credit: Startraks Photo/REX/Shutterstock)

Era: 2010s From: Westbourne Grove, West London Her look: Unique combo of haughty and kooky — sullen stare, fine features and punky crop USP: A talented jockey, she won the first-ever ladies charity race, The Magnolia Cup, at Goodwood in 2011. No wonder Burberry have snapped her up to convey classy Brit cool personified in ad campaigns galore. 30. Jourdan Dunn

(Image credit: Startraks Photo/REX/Shutterstock)

Era: 2000s-present From: Greenford, West London Her look: Razor-sharp cheekbones and a sultry, sleepy gaze USP: In 2008, she was (sadly) the first black model to walk in a Prada show for over a decade. Now a poster girl for diversity in modelling, causing controversy when she posted on social media that she’d been rejected from a Dior couture show for her boobs and not her skin colour, which she said is what ‘usually happens’. Also a role model for young single mums everywhere — she had her son, Riley, at 19, and has spoken about his battle with sickle-cell anaemia.

31. Kendall Jenner

(Image credit: SIPA/REX/Shutterstock)

Era: 2010s-present From: LA Her look: High-fashion version of the Kardashian LookTM USP: When you book Kendall, you book access to 35 MILLION devoted social media followers. Yup, she was definitely paying attention when Kris ‘n’ Kim were working out the global domination master plan. Google have named her as the second most-searched-for model in the world, and Adweek claimed she generates $236,000 for a single Tweet. The Instagirl generation has arrived.

Modeling is not an easy thing to do and for somebody who is not really interested in what models do ,I may not even be clear what these models do. Somebody may be wondering what they gain by walking on their toes as people watch them. Somebody like that may not even imagine that some of the models earn more than some of the famous people they know. However, not every model is lucky enough to collect these lots of money as it requires particular attributes to do that. All the same, here are the top 10 highest paid models in 2020.

The model must be able to grab every single opportunity they come across and know how to couple these opportunities with great deals. If the model does these two things in the right way then it may just open both doors and windows for cash to start flowing in. Stories have been told about some models who came from very humble backgrounds but latter become filthy rich just because of modeling. If you have a passion for modeling then I will definitely encourage you to follow your dream. It may not just end up being your full-time job but also well rewarding. I invite you to look at the top ten highest paid models in the whole word. These people are filthy rich!

Table of Contents

- 10. Miranda Kerr

- 8. Candice Swanepoel

- 7. Cara Delevingne

- 6. Rosie Huntington-Whiteley

- 5. Gigi Hadid

- 4. Kendall Jenner

- 3. Karlie Kloss

- 2. Adriana Lima

- 1. Gisele Bündchen

10. Miranda Kerr

-

$6,000,000

At the age of thirty four, this model has managed to secure regular payments of up to six million dollars. I mean so many people spend a lot of hours in offices but still they cannot even imagine such amount of money in their bank accounts. Her high paying contracts with Escada fragrance and Wonderba have seen her appear in this list of top earning models this year. The Australian supper model also happens to own a series of glass ware, a cosmetic line and also Kora organics. By my standards I would say that she is really doing well.

At a tender age of thirteen, Kerr had already got herself into the modeling industry. She also happens to be the first Australian to take part in Victoria’s secret campaign and that was in the year two thousand and seven. The events around the year 1997 that saw her make away with victories at nationwide model search which was organized by Impulse fragrances and Dolly Magazine would be considered the turning point for this model as she got her first break through.

However, her winning did not just come alone, it come with controversies surrounding the effects of modeling and fashion on girls of her age at that time. Other than being featured in this list, Kerr is also ranked among the best most admired women. Among many other things, she is also an author of a self help book and owns Kora Organics which happens to be her own brand of organic skin care. I think if you want to be a model you can use a few tips from her but that is just what I think because inspiration is key, even before you get the tips you have to be inspired.

9. Liu Wen

-

$7,000,000

Now this one is interesting because at some point I thought that for you to earn good money if you are a Chinese then you have to find yourself featured in a kung –fu movie but Liu Wen must have proved me wrong. Anyway I still have to confirm if she is not featured in one of such movies. All that put aside, this model’s face and gorgeous body can tell it all. She has a way of coupling her beauty with steady contracts that always see her smiling her way to the bank.

One of the contracts that has seen featured in this list is the contract with La Perla , a high end clothing brand. In addition to that she also has another contract with Estee Launder. This has made hare one of the highest earning Chinese citizens and a very popular lady in the Chinese fashion model

8. Candice Swanepoel

-

$7,100,000

This is another model who has also managed to appear in the list of the most beautiful women on earth. She is a unique model in that she actually cares for her people and this is evident from the support she gives to Mother2Mother, a charity organization that works towards eradication of HIV among the women and children of Africa. This South African diva has mastered the skill of balancing time between her contracts. She currently has three known contracts. Her contracts with Maxfactor, Biotherm and Victoria Secret seem to be well rewarding.

Her career in modeling began when she was lucky to be adopted by a model scout at the age of fifteen. If you have been keen you will realize that she has appeared in several covers some of which include Lush, GQ, Harper’s Bazaar, I-D, Elle among others. In addition to that she is also not a new face in advertising. She has done advertisements for Christian Dior, Jean Paul Gaultier and Jason Wu just to mention but a few.

7. Cara Delevingne

-

$8,500,000

At number seven is not just a model but also a big name in the film industry. I know many did not expect her to be in this list however, Cara Jocelyn Delevingne with a massive following of over thirty four million people on Instagram may have just found a way to making millions making her one of the biggest earners of the year. If you did not know her well allow be to let you know that she is not just a model but also an actress and a singer too. Her history in modeling is rather interesting. Can you imagine that she actually dropped out of school at the age of ten to go into modeling! It paid off anyway. She has been able to win the model of the year awards not once but twice in the years 2014 and 2012 at the British Fashion Awards.

6. Rosie Huntington-Whiteley

-

$9,000,000

I actually did not believe that modeling can also be inborn until I come across this beautiful English model. She is a perfect example of natural talent. It is this talent that makes her carries home a clean nine million dollars. And did I mention that she is feature among the most beautiful English models and I actually think that is one of the reasons she has gained popularity. You can catch her on Instagram @rosiehw and prove to yourself her lifestyle given what she posts that features high-end living.

5. Gigi Hadid

-

$9,000,000

I am tempted to say that models are at their best when they are are in their twenties. This is another young model who is earning big from the industry. In a normal situation, I would say that nine million dollars is too much money to be earned by a twenty two years old lady. However, because of this model, I am willing to change my stand to accommodate extra ordinary cases like hers. Her deals with Topshop, Tommy Hilfiger, Maybelline and BMW may be attributed to her featuring in this list. Moreover, if you do not know her well then you might just be surprised to know that she also appears in The Top 50 models @ models .com

As if that is not enough, the British Fashion Council in the year two thousand and sixteen saw it wise to name Gigi Hadid the International Model of the Year. I would say that she is very lucky to have what many models out there would wish to have and if it is not luck then I do not know what it is.

4. Kendall Jenner

-

$10,000,000

Did you ever imagine that the most beautiful ladies in the earth are also the highest income earners in the word? I guess your answer is a big no but i want to assure you that you are not alone in this because most people I have come across actually know nothing about modeling. However, those who know and understand modeling would know that beautiful ladies in the modeling industry are also among the millionaire list.

In the word today, Kendall Jenner is ranked not only in the list of highest paid models but also among the most beautiful girls in the planet earth. She is a perfect example of a multitalented model with ballet skill to add to her modeling. She is actually among the few models who posses ballet skills. In fact, I have never heard of any other model with such skills. If you are among the Instagram fans then I guess you already know that she is one of the most followed persons on that social media platform. If you are not already following her then check her @kendallienner.

3. Karlie Kloss

-

$10,000,000

There is also a breed of models that couple modeling with entrepreneurship. Karlie Kloss is just one of them. With an estimate earning of ten million dollars, she manages to secure a third position on the list of the higher paid models. She managed to retain the America’s Secret Angel for four consecutive years from the year two thousand and eleven to the year two thousand and fourteen. Besides, she is also ranked among the most beautiful models in America.

2. Adriana Lima

-

$10,500,000

Lima is the longest running angel in the history of Victoria Secret. She has what many of the models in the world would like to have. She is considered a role model by many beginners in the industry. This did not just come easy, from as early as the year two thousand, she was already the spokes model for a renowned cosmetics company named Maybelline cosmetics, a position that she has been holding since then to date. That is not the only contract that she has managed to keep for such a long period of time. Other companies that she has worked with include Kia Motors Commercials and Super Bowl with which she worked with from the year two thousand and three to the year two thousand and nine.

As a result of holding the record of longest running angel in the history of Victoria Secret, she has come to be popularly known as Victoria’s Secret Angel. The most astonishing part of her story is when she first thought of being a model. You will agree with me that some people up to collage level before they know what they really want to do with their lives. That was not the case with this super model; on the contrary she was able to identify her talent while still in elementary school and if you think she waited to be an adult before starting to grab awards, then you are wrong, she was crowed a beauty pageant while in elementary school, an award that motivated her to be what she is today.

1. Gisele Bündchen

-

$30,500,000

At number one is Gisele Bundchen. This one never seizes to amaze me; in fact it looks like riches decided to follow her to retirement. Despite having retired from the modeling industry, her name can still not miss in the list of the top highest paid models in the world and from the look of things she is set to continue featuring in this list. For the past ten years, she has maintained the top slot in the list of super rich models. As a result, she has been named the QUEEN of Supermodels.

This Brazilian model is not just a model, beyond that; she is a producer and an actress. Her modeling star started to shine in the 1990s and she is also a major contributor to the end of heroin chic modeling era in 1999. To some people she is the only remaining true and original supermodel today. Among her achievements is the invention of the “horse walk”. We cannot also ignore the fact that she was actually part of the Victoria’s Secret Angels from the year two thousand to mid two thousand and seven. She also played a movie role in the Devil Wear Prada (2006) and also Taxi (2004).

Conclusion

Modeling in this century is a profession that many would envy since it is evident that it is well paying. However, it requires a lot of determination and self sacrifice to climb to the top because for you to be at the top, you have to be among the best.

Related

-

Facebook

-

Twitterc

-

Google+

-

Pinterest

UPDATE: We have published the updated research summaries of the Top 6 NLP Language Models Transforming AI in 2023.

The introduction of transfer learning and pretrained language models in natural language processing (NLP) pushed forward the limits of language understanding and generation. Transfer learning and applying transformers to different downstream NLP tasks have become the main trend of the latest research advances.

At the same time, there is a controversy in the NLP community regarding the research value of the huge pretrained language models occupying the leaderboards. While lots of AI experts agree with Anna Rogers’s statement that getting state-of-the-art results just by using more data and computing power is not research news, other NLP opinion leaders point out some positive moments in the current trend, like, for example, the possibility of seeing the fundamental limitations of the current paradigm.

Anyway, the latest improvements in NLP language models seem to be driven not only by the massive boosts in computing capacity but also by the discovery of ingenious ways to lighten models while maintaining high performance.

To help you stay up to date with the latest breakthroughs in language modeling, we’ve summarized research papers featuring the key language models introduced during the last few years.

Subscribe to our AI Research mailing list at the bottom of this article to be alerted when we release new summaries.

If you’d like to skip around, here are the papers we featured:

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- GPT2: Language Models Are Unsupervised Multitask Learners

- XLNet: Generalized Autoregressive Pretraining for Language Understanding

- RoBERTa: A Robustly Optimized BERT Pretraining Approach

- ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

- T5: Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer

- GPT3: Language Models Are Few-Shot Learners

- ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

- DeBERTa: Decoding-enhanced BERT with Disentangled Attention

- PaLM: Scaling Language Modeling with Pathways

Important Pretrained Language Models

1. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, by Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova

Original Abstract

We introduce a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike recent language representation models, BERT is designed to pre-train deep bidirectional representations by jointly conditioning on both left and right context in all layers. As a result, the pre-trained BERT representations can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference, without substantial task-specific architecture modifications.

BERT is conceptually simple and empirically powerful. It obtains new state-of-the-art results on eleven natural language processing tasks, including pushing the GLUE benchmark to 80.4% (7.6% absolute improvement), MultiNLI accuracy to 86.7 (5.6% absolute improvement) and the SQuAD v1.1 question answering Test F1 to 93.2 (1.5% absolute improvement), outperforming human performance by 2.0%.

Our Summary

A Google AI team presents a new cutting-edge model for Natural Language Processing (NLP) – BERT, or Bidirectional Encoder Representations from Transformers. Its design allows the model to consider the context from both the left and the right sides of each word. While being conceptually simple, BERT obtains new state-of-the-art results on eleven NLP tasks, including question answering, named entity recognition and other tasks related to general language understanding.

What’s the core idea of this paper?

- Training a deep bidirectional model by randomly masking a percentage of input tokens – thus, avoiding cycles where words can indirectly “see themselves”.

- Also pre-training a sentence relationship model by building a simple binary classification task to predict whether sentence B immediately follows sentence A, thus allowing BERT to better understand relationships between sentences.

- Training a very big model (24 Transformer blocks, 1024-hidden, 340M parameters) with lots of data (3.3 billion word corpus).

What’s the key achievement?

- Advancing the state-of-the-art for 11 NLP tasks, including:

- getting a GLUE score of 80.4%, which is 7.6% of absolute improvement from the previous best result;

- achieving 93.2% accuracy on SQuAD 1.1 and outperforming human performance by 2%.

- Suggesting a pre-trained model, which doesn’t require any substantial architecture modifications to be applied to specific NLP tasks.

What does the AI community think?

- BERT model marks a new era of NLP.

- In a nutshell, two unsupervised tasks together (“fill in the blank” and “does sentence B comes after sentence A?” ) provide great results for many NLP tasks.

- Pre-training of language models becomes a new standard.

What are future research areas?

- Testing the method on a wider range of tasks.

- Investigating the linguistic phenomena that may or may not be captured by BERT.

What are possible business applications?

- BERT may assist businesses with a wide range of NLP problems, including:

- chatbots for better customer experience;

- analysis of customer reviews;

- the search for relevant information, etc.

Where can you get implementation code?

- Google Research has released an official Github repository with Tensorflow code and pre-trained models for BERT.

- PyTorch implementation of BERT is also available on GitHub.

2. Language Models Are Unsupervised Multitask Learners, by Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever

Original Abstract

Natural language processing tasks, such as question answering, machine translation, reading comprehension, and summarization, are typically approached with supervised learning on task-specific datasets. We demonstrate that language models begin to learn these tasks without any explicit supervision when trained on a new dataset of millions of webpages called WebText. When conditioned on a document plus questions, the answers generated by the language model reach 55 F1 on the CoQA dataset – matching or exceeding the performance of 3 out of 4 baseline systems without using the 127,000+ training examples. The capacity of the language model is essential to the success of zero-shot task transfer and increasing it improves performance in a log-linear fashion across tasks. Our largest model, GPT-2, is a 1.5B parameter Transformer that achieves state of the art results on 7 out of 8 tested language modeling datasets in a zero-shot setting but still underfits WebText. Samples from the model reflect these improvements and contain coherent paragraphs of text. These findings suggest a promising path towards building language processing systems which learn to perform tasks from their naturally occurring demonstrations.

Our Summary

In this paper, the OpenAI team demonstrates that pre-trained language models can be used to solve downstream tasks without any parameter or architecture modifications. They have trained a very big model, a 1.5B-parameter Transformer, on a large and diverse dataset that contains text scraped from 45 million webpages. The model generates coherent paragraphs of text and achieves promising, competitive or state-of-the-art results on a wide variety of tasks.

What’s the core idea of this paper?

- Training the language model on the large and diverse dataset:

- selecting webpages that have been curated/filtered by humans;

- cleaning and de-duplicating the texts, and removing all Wikipedia documents to minimize overlapping of training and test sets;

- using the resulting WebText dataset with slightly over 8 million documents for a total of 40 GB of text.

- Using a byte-level version of Byte Pair Encoding (BPE) for input representation.

- Building a very big Transformer-based model, GPT-2:

- the largest model includes 1542M parameters and 48 layers;

- the model mainly follows the OpenAI GPT model with few modifications (i.e., expanding vocabulary and context size, modifying initialization etc.).

What’s the key achievement?

- Getting state-of-the-art results on 7 out of 8 tested language modeling datasets.

- Showing quite promising results in commonsense reasoning, question answering, reading comprehension, and translation.

- Generating coherent texts, for example, a news article about the discovery of talking unicorns.

What does the AI community think?

- “The researchers built an interesting dataset, applying now-standard tools and yielding an impressive model.” – Zachary C. Lipton, an assistant professor at Carnegie Mellon University.

What are future research areas?

- Investigating fine-tuning on benchmarks such as decaNLP and GLUE to see whether the huge dataset and capacity of GPT-2 can overcome the inefficiencies of BERT’s unidirectional representations.

What are possible business applications?

- In terms of practical applications, the performance of the GPT-2 model without any fine-tuning is far from usable but it shows a very promising research direction.

Where can you get implementation code?

- Initially, OpenAI decided to release only a smaller version of GPT-2 with 117M parameters. The decision not to release larger models was taken “due to concerns about large language models being used to generate deceptive, biased, or abusive language at scale”.

- In November, OpenAI finally released its largest 1.5B-parameter model. The code is available here.

- Hugging Face has introduced a PyTorch implementation of the initially released GPT-2 model.

3. XLNet: Generalized Autoregressive Pretraining for Language Understanding, by Zhilin Yang, Zihang Dai, Yiming Yang, Jaime Carbonell, Ruslan Salakhutdinov, Quoc V. Le

Original Abstract

With the capability of modeling bidirectional contexts, denoising autoencoding based pretraining like BERT achieves better performance than pretraining approaches based on autoregressive language modeling. However, relying on corrupting the input with masks, BERT neglects dependency between the masked positions and suffers from a pretrain-finetune discrepancy. In light of these pros and cons, we propose XLNet, a generalized autoregressive pretraining method that (1) enables learning bidirectional contexts by maximizing the expected likelihood over all permutations of the factorization order and (2) overcomes the limitations of BERT thanks to its autoregressive formulation. Furthermore, XLNet integrates ideas from Transformer-XL, the state-of-the-art autoregressive model, into pretraining. Empirically, XLNet outperforms BERT on 20 tasks, often by a large margin, and achieves state-of-the-art results on 18 tasks including question answering, natural language inference, sentiment analysis, and document ranking.

Our Summary

The researchers from Carnegie Mellon University and Google have developed a new model, XLNet, for natural language processing (NLP) tasks such as reading comprehension, text classification, sentiment analysis, and others. XLNet is a generalized autoregressive pretraining method that leverages the best of both autoregressive language modeling (e.g., Transformer-XL) and autoencoding (e.g., BERT) while avoiding their limitations. The experiments demonstrate that the new model outperforms both BERT and Transformer-XL and achieves state-of-the-art performance on 18 NLP tasks.

What’s the core idea of this paper?

- XLNet combines the bidirectional capability of BERT with the autoregressive technology of Transformer-XL:

- Like BERT, XLNet uses a bidirectional context, which means it looks at the words before and after a given token to predict what it should be. To this end, XLNet maximizes the expected log-likelihood of a sequence with respect to all possible permutations of the factorization order.

- As an autoregressive language model, XLNet doesn’t rely on data corruption, and thus avoids BERT’s limitations due to masking – i.e., pretrain-finetune discrepancy and the assumption that unmasked tokens are independent of each other.

- To further improve architectural designs for pretraining, XLNet integrates the segment recurrence mechanism and relative encoding scheme of Transformer-XL.

What’s the key achievement?

- XLnet outperforms BERT on 20 tasks, often by a large margin.

- The new model achieves state-of-the-art performance on 18 NLP tasks including question answering, natural language inference, sentiment analysis, and document ranking.

What does the AI community think?

- The paper was accepted for oral presentation at NeurIPS 2019, the leading conference in artificial intelligence.

- “The king is dead. Long live the king. BERT’s reign might be coming to an end. XLNet, a new model by people from CMU and Google outperforms BERT on 20 tasks.” – Sebastian Ruder, a research scientist at Deepmind.

- “XLNet will probably be an important tool for any NLP practitioner for a while…[it is] the latest cutting-edge technique in NLP.” – Keita Kurita, Carnegie Mellon University.

What are future research areas?

- Extending XLNet to new areas, such as computer vision and reinforcement learning.

What are possible business applications?

- XLNet may assist businesses with a wide range of NLP problems, including:

- chatbots for first-line customer support or answering product inquiries;

- sentiment analysis for gauging brand awareness and perception based on customer reviews and social media;

- the search for relevant information in document bases or online, etc.

Where can you get implementation code?

- The authors have released the official Tensorflow implementation of XLNet.

- PyTorch implementation of the model is also available on GitHub.

4. RoBERTa: A Robustly Optimized BERT Pretraining Approach, by Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyanov

Original Abstract

Language model pretraining has led to significant performance gains but careful comparison between different approaches is challenging. Training is computationally expensive, often done on private datasets of different sizes, and, as we will show, hyperparameter choices have significant impact on the final results. We present a replication study of BERT pretraining (Devlin et al., 2019) that carefully measures the impact of many key hyperparameters and training data size. We find that BERT was significantly undertrained, and can match or exceed the performance of every model published after it. Our best model achieves state-of-the-art results on GLUE, RACE and SQuAD. These results highlight the importance of previously overlooked design choices, and raise questions about the source of recently reported improvements. We release our models and code.

Our Summary

Natural language processing models have made significant advances thanks to the introduction of pretraining methods, but the computational expense of training has made replication and fine-tuning parameters difficult. In this study, Facebook AI and the University of Washington researchers analyzed the training of Google’s Bidirectional Encoder Representations from Transformers (BERT) model and identified several changes to the training procedure that enhance its performance. Specifically, the researchers used a new, larger dataset for training, trained the model over far more iterations, and removed the next sequence prediction training objective. The resulting optimized model, RoBERTa (Robustly Optimized BERT Approach), matched the scores of the recently introduced XLNet model on the GLUE benchmark.

What’s the core idea of this paper?

- The Facebook AI research team found that BERT was significantly undertrained and suggested an improved recipe for its training, called RoBERTa:

- More data: 160GB of text instead of the 16GB dataset originally used to train BERT.

- Longer training: increasing the number of iterations from 100K to 300K and then further to 500K.

- Larger batches: 8K instead of 256 in the original BERT base model.

- Larger byte-level BPE vocabulary with 50K subword units instead of character-level BPE vocabulary of size 30K.

- Removing the next sequence prediction objective from the training procedure.

- Dynamically changing the masking pattern applied to the training data.

What’s the key achievement?

- RoBERTa outperforms BERT in all individual tasks on the General Language Understanding Evaluation (GLUE) benchmark.

- The new model matches the recently introduced XLNet model on the GLUE benchmark and sets a new state of the art in four out of nine individual tasks.

What are future research areas?

- Incorporating more sophisticated multi-task finetuning procedures.

What are possible business applications?

- Big pretrained language frameworks like RoBERTa can be leveraged in the business setting for a wide range of downstream tasks, including dialogue systems, question answering, document classification, etc.

Where can you get implementation code?

- The models and code used in this study are available on GitHub.

5. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations, by Zhenzhong Lan, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma, Radu Soricut

Original Abstract

Increasing model size when pretraining natural language representations often results in improved performance on downstream tasks. However, at some point further model increases become harder due to GPU/TPU memory limitations, longer training times, and unexpected model degradation. To address these problems, we present two parameter-reduction techniques to lower memory consumption and increase the training speed of BERT. Comprehensive empirical evidence shows that our proposed methods lead to models that scale much better compared to the original BERT. We also use a self-supervised loss that focuses on modeling inter-sentence coherence, and show it consistently helps downstream tasks with multi-sentence inputs. As a result, our best model establishes new state-of-the-art results on the GLUE, RACE, and SQuAD benchmarks while having fewer parameters compared to BERT-large.

Our Summary

The Google Research team addresses the problem of the continuously growing size of the pretrained language models, which results in memory limitations, longer training time, and sometimes unexpectedly degraded performance. Specifically, they introduce A Lite BERT (ALBERT) architecture that incorporates two parameter-reduction techniques: factorized embedding parameterization and cross-layer parameter sharing. In addition, the suggested approach includes a self-supervised loss for sentence-order prediction to improve inter-sentence coherence. The experiments demonstrate that the best version of ALBERT sets new state-of-the-art results on GLUE, RACE, and SQuAD benchmarks while having fewer parameters than BERT-large.

What’s the core idea of this paper?

- It is not reasonable to further improve language models by making them larger because of memory limitations of available hardware, longer training times, and unexpected degradation of model performance with the increased number of parameters.

- To address this problem, the researchers introduce the ALBERT architecture that incorporates two parameter-reduction techniques:

- factorized embedding parameterization, where the size of the hidden layers is separated from the size of vocabulary embeddings by decomposing the large vocabulary-embedding matrix into two small matrices;

- cross-layer parameter sharing to prevent the number of parameters from growing with the depth of the network.

- The performance of ALBERT is further improved by introducing the self-supervised loss for sentence-order prediction to address BERT’s limitations with regard to inter-sentence coherence.

What’s the key achievement?

- With the introduced parameter-reduction techniques, the ALBERT configuration with 18× fewer parameters and 1.7× faster training compared to the original BERT-large model achieves only slightly worse performance.

- The much larger ALBERT configuration, which still has fewer parameters than BERT-large, outperforms all of the current state-of-the-art language modes by getting:

- 89.4% accuracy on the RACE benchmark;

- 89.4 score on the GLUE benchmark; and

- An F1 score of 92.2 on the SQuAD 2.0 benchmark.

What does the AI community think?

- The paper has been submitted to ICLR 2020 and is available on the OpenReview forum, where you can see the reviews and comments of NLP experts. The reviewers are mainly very appreciative of the presented paper.

What are future research areas?

- Speeding up training and inference through methods like sparse attention and block attention.

- Further improving the model performance through hard example mining, more efficient model training, and other approaches.

What are possible business applications?

- The ALBERT language model can be leveraged in the business setting to improve performance on a wide range of downstream tasks, including chatbot performance, sentiment analysis, document mining, and text classification.

Where can you get implementation code?

- The original implementation of ALBERT is available on GitHub.

- A TensorFlow implementation of ALBERT is also available here.

- A PyTorch implementation of ALBERT can be found here and here.

6. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer, by Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, Peter J. Liu

Original Abstract

Transfer learning, where a model is first pre-trained on a data-rich task before being fine-tuned on a downstream task, has emerged as a powerful technique in natural language processing (NLP). The effectiveness of transfer learning has given rise to a diversity of approaches, methodology, and practice. In this paper, we explore the landscape of transfer learning techniques for NLP by introducing a unified framework that converts every language problem into a text-to-text format. Our systematic study compares pre-training objectives, architectures, unlabeled datasets, transfer approaches, and other factors on dozens of language understanding tasks. By combining the insights from our exploration with scale and our new “Colossal Clean Crawled Corpus”, we achieve state-of-the-art results on many benchmarks covering summarization, question answering, text classification, and more. To facilitate future work on transfer learning for NLP, we release our dataset, pre-trained models, and code.

Our Summary

The Google research team suggests a unified approach to transfer learning in NLP with the goal to set a new state of the art in the field. To this end, they propose treating each NLP problem as a “text-to-text” problem. Such a framework allows using the same model, objective, training procedure, and decoding process for different tasks, including summarization, sentiment analysis, question answering, and machine translation. The researchers call their model a Text-to-Text Transfer Transformer (T5) and train it on the large corpus of web-scraped data to get state-of-the-art results on a number of NLP tasks.

What’s the core idea of this paper?

- The paper has several important contributions:

- Providing a comprehensive perspective on where the NLP field stands by exploring and comparing existing techniques.

- Introducing a new approach to transfer learning in NLP by suggesting treating every NLP problem as a text-to-text task:

- The model understands which tasks should be performed thanks to the task-specific prefix added to the original input sentence (e.g., “translate English to German:”, “summarize:”).

- Presenting and releasing a new dataset consisting of hundreds of gigabytes of clean web-scraped English text, the Colossal Clean Crawled Corpus (C4).

- Training a large (up to 11B parameters) model, called Text-to-Text Transfer Transformer (T5) on the C4 dataset.

What’s the key achievement?

- The T5 model with 11 billion parameters achieved state-of-the-art performance on 17 out of 24 tasks considered, including:

- a GLUE score of 89.7 with substantially improved performance on CoLA, RTE, and WNLI tasks;

- an Exact Match score of 90.06 on the SQuAD dataset;

- a SuperGLUE score of 88.9, which is a very significant improvement over the previous state-of-the-art result (84.6) and very close to human performance (89.8);

- a ROUGE-2-F score of 21.55 on the CNN/Daily Mail abstractive summarization task.

What are future research areas?

- Researching the methods to achieve stronger performance with cheaper models.

- Exploring more efficient knowledge extraction techniques.

- Further investigating the language-agnostic models.

What are possible business applications?

- Even though the introduced model has billions of parameters and can be too heavy to be applied in the business setting, the presented ideas can be used to improve the performance on different NLP tasks, including summarization, question answering, and sentiment analysis.

Where can you get implementation code?

- The pretrained models together with the dataset and code are released on GitHub.

7. Language Models are Few-Shot Learners, by Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, Dario Amodei

Original Abstract

Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task. While typically task-agnostic in architecture, this method still requires task-specific fine-tuning datasets of thousands or tens of thousands of examples. By contrast, humans can generally perform a new language task from only a few examples or from simple instructions – something which current NLP systems still largely struggle to do. Here we show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art fine-tuning approaches. Specifically, we train GPT-3, an autoregressive language model with 175 billion parameters, 10× more than any previous non-sparse language model, and test its performance in the few-shot setting. For all tasks, GPT-3 is applied without any gradient updates or fine-tuning, with tasks and few-shot demonstrations specified purely via text interaction with the model. GPT-3 achieves strong performance on many NLP datasets, including translation, question-answering, and cloze tasks, as well as several tasks that require on-the-fly reasoning or domain adaptation, such as unscrambling words, using a novel word in a sentence, or performing 3-digit arithmetic. At the same time, we also identify some datasets where GPT-3’s few-shot learning still struggles, as well as some datasets where GPT-3 faces methodological issues related to training on large web corpora. Finally, we find that GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans. We discuss broader societal impacts of this finding and of GPT-3 in general.

Our Summary

The OpenAI research team draws attention to the fact that the need for a labeled dataset for every new language task limits the applicability of language models. Considering that there is a wide range of possible tasks and it’s often difficult to collect a large labeled training dataset, the researchers suggest an alternative solution, which is scaling up language models to improve task-agnostic few-shot performance. They test their solution by training a 175B-parameter autoregressive language model, called GPT-3, and evaluating its performance on over two dozen NLP tasks. The evaluation under few-shot learning, one-shot learning, and zero-shot learning demonstrates that GPT-3 achieves promising results and even occasionally outperforms the state of the art achieved by fine-tuned models.

What’s the core idea of this paper?

- The GPT-3 model uses the same model and architecture as GPT-2, including the modified initialization, pre-normalization, and reversible tokenization.

- However, in contrast to GPT-2, it uses alternating dense and locally banded sparse attention patterns in the layers of the transformer, as in the Sparse Transformer.

- The model is evaluated in three different settings:

- Few-shot learning, when the model is given a few demonstrations of the task (typically, 10 to 100) at inference time but with no weight updates allowed.

- One-shot learning, when only one demonstration is allowed, together with a natural language description of the task.

- Zero-shot learning, when no demonstrations are allowed and the model has access only to a natural language description of the task.

What’s the key achievement?

- The GPT-3 model without fine-tuning achieves promising results on a number of NLP tasks, and even occasionally surpasses state-of-the-art models that were fine-tuned for that specific task:

- On the CoQA benchmark, 81.5 F1 in the zero-shot setting, 84.0 F1 in the one-shot setting, and 85.0 F1 in the few-shot setting, compared to the 90.7 F1 score achieved by fine-tuned SOTA.

- On the TriviaQA benchmark, 64.3% accuracy in the zero-shot setting, 68.0% in the one-shot setting, and 71.2% in the few-shot setting, surpassing the state of the art (68%) by 3.2%.

- On the LAMBADA dataset, 76.2 % accuracy in the zero-shot setting, 72.5% in the one-shot setting, and 86.4% in the few-shot setting, surpassing the state of the art (68%) by 18%.

- The news articles generated by the 175B-parameter GPT-3 model are hard to distinguish from real ones, according to human evaluations (with accuracy barely above the chance level at ~52%).

What are future research areas?

- Improving pre-training sample efficiency.

- Exploring how few-shot learning works.

- Distillation of large models down to a manageable size for real-world applications.

What does the AI community think?

- “The GPT-3 hype is way too much. It’s impressive (thanks for the nice compliments!) but it still has serious weaknesses and sometimes makes very silly mistakes. AI is going to change the world, but GPT-3 is just a very early glimpse. We have a lot still to figure out.” – Sam Altman, CEO and co-founder of OpenAI.

- “I’m shocked how hard it is to generate text about Muslims from GPT-3 that has nothing to do with violence… or being killed…” – Abubakar Abid, CEO and founder of Gradio.

- “No. GPT-3 fundamentally does not understand the world that it talks about. Increasing corpus further will allow it to generate a more credible pastiche but not fix its fundamental lack of comprehension of the world. Demos of GPT-4 will still require human cherry picking.” – Gary Marcus, CEO and founder of Robust.ai.

- “Extrapolating the spectacular performance of GPT3 into the future suggests that the answer to life, the universe and everything is just 4.398 trillion parameters.” – Geoffrey Hinton, Turing Award winner.

What are possible business applications?

- The model with 175B parameters is hard to apply to real business problems due to its impractical resource requirements, but if the researchers manage to distill this model down to a workable size, it could be applied to a wide range of language tasks, including question answering and ad copy generation.

Where can you get implementation code?

- The code itself is not available, but some dataset statistics together with unconditional, unfiltered 2048-token samples from GPT-3 are released on GitHub.

8. ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators, by Kevin Clark, Minh-Thang Luong, Quoc V. Le, Christopher D. Manning

Original Abstract

Masked language modeling (MLM) pre-training methods such as BERT corrupt the input by replacing some tokens with [MASK] and then train a model to reconstruct the original tokens. While they produce good results when transferred to downstream NLP tasks, they generally require large amounts of compute to be effective. As an alternative, we propose a more sample-efficient pre-training task called replaced token detection. Instead of masking the input, our approach corrupts it by replacing some tokens with plausible alternatives sampled from a small generator network. Then, instead of training a model that predicts the original identities of the corrupted tokens, we train a discriminative model that predicts whether each token in the corrupted input was replaced by a generator sample or not. Thorough experiments demonstrate this new pre-training task is more efficient than MLM because the task is defined over all input tokens rather than just the small subset that was masked out. As a result, the contextual representations learned by our approach substantially outperform the ones learned by BERT given the same model size, data, and compute. The gains are particularly strong for small models; for example, we train a model on one GPU for 4 days that outperforms GPT (trained using 30× more compute) on the GLUE natural language understanding benchmark. Our approach also works well at scale, where it performs comparably to RoBERTa and XLNet while using less than 1/4 of their compute and outperforms them when using the same amount of compute.

Our Summary

The pre-training task for popular language models like BERT and XLNet involves masking a small subset of unlabeled input and then training the network to recover this original input. Even though it works quite well, this approach is not particularly data-efficient as it learns from only a small fraction of tokens (typically ~15%). As an alternative, the researchers from Stanford University and Google Brain propose a new pre-training task called replaced token detection. Instead of masking, they suggest replacing some tokens with plausible alternatives generated by a small language model. Then, the pre-trained discriminator is used to predict whether each token is an original or a replacement. As a result, the model learns from all input tokens instead of the small masked fraction, making it much more computationally efficient. The experiments confirm that the introduced approach leads to significantly faster training and higher accuracy on downstream NLP tasks.

What’s the core idea of this paper?

- Pre-training methods that are based on masked language modeling are computationally inefficient as they use only a small fraction of tokens for learning.

- Researchers propose a new pre-training task called replaced token detection, where:

- some tokens are replaced by samples from a small generator network;

- a model is pre-trained as a discriminator to distinguish between original and replaced tokens.

- The introduced approach, called ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately):

- enables the model to learn from all input tokens instead of the small masked-out subset;

- is not adversarial, despite the similarity to GAN, as the generator producing tokens for replacement is trained with maximum likelihood.

What’s the key achievement?

- Demonstrating that the discriminative task of distinguishing between real data and challenging negative samples is more efficient than existing generative methods for language representation learning.

- Introducing a model that substantially outperforms state-of-the-art approaches while requiring less pre-training compute:

- ELECTRA-Small gets a GLUE score of 79.9 and outperforms a comparably small BERT model with a score of 75.1 and a much larger GPT model with a score of 78.8.

- An ELECTRA model that performs comparably to XLNet and RoBERTa uses only 25% of their pre-training compute.

- ELECTRA-Large outscores the alternative state-of-the-art models on the GLUE and SQuAD benchmarks while still requiring less pre-training compute.

What does the AI community think?

- The paper was selected for presentation at ICLR 2020, the leading conference in deep learning.

What are possible business applications?

- Because of its computational efficiency, the ELECTRA approach can make the application of pre-trained text encoders more accessible to business practitioners.

Where can you get implementation code?

- The original TensorFlow implementation and pre-trained weights are released on GitHub.

9. DeBERTa: Decoding-enhanced BERT with Disentangled Attention, by Pengcheng He, Xiaodong Liu, Jianfeng Gao, Weizhu Chen

Original Abstract

Recent progress in pre-trained neural language models has significantly improved the performance of many natural language processing (NLP) tasks. In this paper we propose a new model architecture DeBERTa (Decoding-enhanced BERT with disentangled attention) that improves the BERT and RoBERTa models using two novel techniques. The first is the disentangled attention mechanism, where each word is represented using two vectors that encode its content and position, respectively, and the attention weights among words are computed using disentangled matrices on their contents and relative positions, respectively. Second, an enhanced mask decoder is used to incorporate absolute positions in the decoding layer to predict the masked tokens in model pre-training. In addition, a new virtual adversarial training method is used for fine-tuning to improve models’ generalization. We show that these techniques significantly improve the efficiency of model pre-training and the performance of both natural language understanding (NLU) and natural language generation (NLG) downstream tasks. Compared to RoBERTa-Large, a DeBERTa model trained on half of the training data performs consistently better on a wide range of NLP tasks, achieving improvements on MNLI by +0.9% (90.2% vs. 91.1%), on SQuAD v2.0 by +2.3% (88.4% vs. 90.7%) and RACE by +3.6% (83.2% vs. 86.8%). Notably, we scale up DeBERTa by training a larger version that consists of 48 Transform layers with 1.5 billion parameters. The significant performance boost makes the single DeBERTa model surpass the human performance on the SuperGLUE benchmark (Wang et al., 2019a) for the first time in terms of macro-average score (89.9 versus 89.8), and the ensemble DeBERTa model sits atop the SuperGLUE leaderboard as of January 6, 2021, outperforming the human baseline by a decent margin (90.3 versus 89.8).

Our Summary

The authors from Microsoft Research propose DeBERTa, with two main improvements over BERT, namely disentangled attention and an enhanced mask decoder. DeBERTa has two vectors representing a token/word by encoding content and relative position respectively. The self-attention mechanism in DeBERTa processes self-attention of content-to-content, content-to-position, and also position-to-content, while the self-attention in BERT is equivalent to only having the first two components. The authors hypothesize that position-to-content self-attention is also needed to comprehensively model relative positions in a sequence of tokens. Furthermore, DeBERTa is equipped with an enhanced mask decoder, where the absolute position of the token/word is also given to the decoder along with the relative information. A single scaled-up variant of DeBERTa surpasses the human baseline on the SuperGLUE benchmark for the first time. The ensemble DeBERTa is the top-performing method on SuperGLUE at the time of this publication.

What’s the core idea of this paper?

- Disentangled attention: In the original BERT, the content embedding and position embedding are added before self-attention and the self-attention is applied only on the output of content and position vectors. The authors hypothesize that this only accounts for content-to-content self-attention and content-to-position self-attention and that we need position-to-content self-attention as well to model position information completely. DeBERTa has two separate vectors representing content and position and self-attention is calculated between all possible pairs, i.e., content-to-content, content-to-position, position-to-content, and position-to-position. Position-to-position self-attention is trivially 1 all the time and has no information, so it is not computed.